Abstract layers for dealing with primitive actions (reach, grasp and more).

Collaboration diagram for actionPrimitives:

Classes

| Kind | Name | Description |
| --- | --- | --- |
| class | iCub::action::ActionPrimitivesCallback | Class for defining routines to be called when an action is completed. |
| struct | iCub::action::ActionPrimitivesWayPoint | Struct for defining way points used for movements in the operational space. |
| class | iCub::action::ActionPrimitives | The base class defining actions. |
| class | iCub::action::ActionPrimitivesLayer1 | A derived class defining a first abstraction layer on top of the ActionPrimitives parent class. |
| class | iCub::action::ActionPrimitivesLayer2 | A class that inherits from ActionPrimitivesLayer1 and integrates force-torque sensing in order to stop the limb while reaching as soon as a contact with external objects is detected. |

Detailed Description

Abstract layers for dealing with primitive actions (reach, grasp and more).

Copyright (C) 2010 RobotCub Consortium

CopyPolicy: Released under the terms of the GNU GPL v2.0.

Description

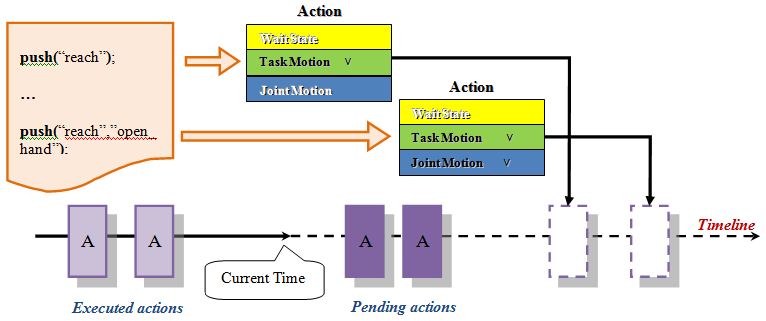

The library relies on the YARP Cartesian Interface and provides the user with a collection of action primitives in task space and joint space, along with an easy way to combine them into higher-level actions (e.g. grasp(), tap(), ...), so that more sophisticated tasks can eventually be executed without reference to the motion control details.

Central to the library's implementation is the concept of action. An action is a "request" for the execution of one of three different tasks, chosen by its internal selector:

1. It can ask to steer the arm to a specified pose, hence performing a motion in the operational space.

2. It can command the execution of a predefined sequence of finger movements in the joint space, identified by a tag.

3. It can ask the system to wait for a specified time interval.

In addition, a single action can be generated that executes a task of type 1 simultaneously with a task of type 2.

Moreover, whenever an action is produced from within the code, the corresponding request item is pushed to the back of the action queue. The user can therefore identify suitable finger movements in the joint space, associate proper 3D grasping points with a hand posture, and finally execute the grasping task as a harmonious combination of a reaching movement and finger actuations, complying with the timing requirements of the synchronized sequence.

A callback can optionally be executed whenever an action is accomplished.
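The action and queue concepts described above can be illustrated with a small, self-contained model. This is only a sketch under stated assumptions: `Pose`, `Action`, `ActionQueue` and all of their members are hypothetical names invented for this example and are not part of the iCub::action API. Execution is simulated by recording a label for each action instead of moving a robot or actually waiting.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical model of the action concept; NOT the iCub::action API.
struct Pose { double x, y, z; };  // target position only, orientation omitted

struct Action {
    std::optional<Pose> reach;           // type 1: motion in the operational space
    std::optional<std::string> handSeq;  // type 2: tagged finger sequence
    double waitSeconds = 0.0;            // type 3: pause (used when 1 and 2 are unset)
    std::function<void()> onDone;        // optional completion callback
};

class ActionQueue {
public:
    using Callback = std::function<void()>;

    void pushReach(const Pose& p, Callback cb = {}) {
        Action a;
        a.reach = p;
        enqueue(std::move(a), std::move(cb));
    }
    void pushHandSeq(const std::string& tag, Callback cb = {}) {
        Action a;
        a.handSeq = tag;
        enqueue(std::move(a), std::move(cb));
    }
    // One action that performs a type 1 and a type 2 task simultaneously.
    void pushCombined(const Pose& p, const std::string& tag, Callback cb = {}) {
        Action a;
        a.reach = p;
        a.handSeq = tag;
        enqueue(std::move(a), std::move(cb));
    }
    void pushWait(double seconds, Callback cb = {}) {
        assert(seconds >= 0.0);
        Action a;
        a.waitSeconds = seconds;
        enqueue(std::move(a), std::move(cb));
    }

    std::size_t size() const { return queue_.size(); }

    // Simulated execution: pops actions in FIFO order, records a label for
    // each one, and fires its callback (if any) once it is "accomplished".
    std::vector<std::string> run() {
        std::vector<std::string> log;
        while (!queue_.empty()) {
            Action a = std::move(queue_.front());
            queue_.pop_front();
            log.push_back(describe(a));
            if (a.onDone) a.onDone();
        }
        return log;
    }

private:
    void enqueue(Action a, Callback cb) {
        a.onDone = std::move(cb);
        queue_.push_back(std::move(a));  // new requests go to the back
    }
    static std::string describe(const Action& a) {
        std::string s;
        if (a.reach) s += "reach";
        if (a.handSeq) {
            if (!s.empty()) s += "+";
            s += "hand:" + *a.handSeq;
        }
        if (s.empty()) s = "wait";
        return s;
    }
    std::deque<Action> queue_;
};
```

In this model a grasp would be composed by queuing a finger sequence that opens the hand, then a combined reach and closing sequence, then a wait. The FIFO order of the queue is what keeps the steps synchronized, and the callback attached to any step runs only after that step has been taken off the queue and processed.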

To detect contacts between the fingers and objects, the perceptiveModels library is employed.

- Note: To streamline the code, the context switching of the Cartesian Interface is delegated to the module linking against ActionPrimitives.